1. Conclusion

-XX:+UnlockExperimentalVMOptions -XX:+UseCGroupMemoryLimitForHeap -XX:MaxRAMFraction=2

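2. Symptoms and Problem

First, rule out a bug in the Java program itself: the container was OOM-killed almost as soon as the program started, and the program is unlikely to be written that badly.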

docker run -m 100MB openjdk:8u121-alpine java -XshowSettings:vm -version

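java -XshowSettings:vm -version # show the heap memory settings

The container was given a 100MB memory quota, yet the maximum heap size the JVM actually picks at runtime is larger than that. Why?

By default the JVM sets Max Heap Size to roughly 25% of the memory it detects, whether that is the host's memory or the container's.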

$ java -XX:+PrintFlagsFinal -version | grep -Ei "maxheapsize|maxram"

uintx DefaultMaxRAMFraction = 4 {product}

uintx MaxHeapSize := 8589934592 {product}

uint64_t MaxRAM = 137438953472 {pd product}

uintx MaxRAMFraction = 4 {product}
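The flag values above fit a simple rule of thumb: the default max heap is the detected memory, capped at MaxRAM, divided by MaxRAMFraction. The sketch below only reproduces that arithmetic. It is a simplified model of the JVM's ergonomics (the real logic also applies floors and other caps), the function name is hypothetical, and the 32 GiB host size is an assumption inferred from the 8 GiB MaxHeapSize rather than something the output states.

```python
GIB = 1024 ** 3


def default_max_heap(physical_bytes: int, max_ram: int, max_ram_fraction: int) -> int:
    """Simplified model: heap = min(physical memory, MaxRAM) / MaxRAMFraction."""
    usable = min(physical_bytes, max_ram)
    return usable // max_ram_fraction


max_ram = 137438953472       # MaxRAM from the output above (128 GiB)
assumed_host = 32 * GIB      # assumption: host RAM, inferred from MaxHeapSize
print(default_max_heap(assumed_host, max_ram, 4))  # 8589934592, i.e. 8 GiB
```

Under this model a JVM that ignores the container quota sizes its heap from the host, which is how a 100MB container can end up with a heap far larger than its limit.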

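3. Experiments and Verification

3.1 Controlling the JVM heap ratio (-XX:MaxRAMFraction)

-XX:MaxRAMFraction=N controls how much of the container / host memory the JVM heap may use: the max heap is about 1/N of that memory, so -XX:MaxRAMFraction=1 allows the heap to take all of it.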

| MaxRAMFraction | % of RAM for heap |
| -------------- | ----------------- |
| 1 | 100% |
| 2 | 50% |
| 3 | 33% |
| 4 | 25% |
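The table is just 100 / N for an integer N. The sketch below makes that explicit and shows a side effect: because N must be a whole number, shares between 50% and 100% (75%, say) cannot be expressed with this flag. The helper name is hypothetical, and the real JVM applies additional floors and caps that this ignores.

```python
def heap_percent(max_ram_fraction: int) -> float:
    """Approximate share of RAM given to the heap for -XX:MaxRAMFraction=N."""
    if max_ram_fraction < 1:
        raise ValueError("MaxRAMFraction must be a positive integer")
    return 100.0 / max_ram_fraction


for n in (1, 2, 3, 4):
    print(f"MaxRAMFraction={n} -> {heap_percent(n):.0f}% of RAM")
```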

[root@infra03 ~]# docker run --rm --name jdk -it -m 400m cloudnativelab.com/base/openjdk8-alpine:CloudnativeLab java -XX:MaxRAMFraction=1 -XshowSettings:vm -version

VM settings:

Max. Heap Size (Estimated): 386.69M

Ergonomics Machine Class: server

Using VM: OpenJDK 64-Bit Server VM

openjdk version "1.8.0_212"

OpenJDK Runtime Environment (IcedTea 3.12.0) (Alpine 8.212.04-r0)

OpenJDK 64-Bit Server VM (build 25.212-b04, mixed mode)

[root@infra03 ~]# docker run --rm --name jdk -it -m 400m cloudnativelab.com/base/openjdk8-alpine:CloudnativeLab java -XX:MaxRAMFraction=4 -XshowSettings:vm -version

Unable to find image 'cloudnativelab.com/base/openjdk8-alpine:CloudnativeLab' locally

CloudnativeLab: Pulling from base/openjdk8-alpine

Digest: sha256:77dd131330b4fa523164fa194f40d804eda3ff09deb740c80c7d9f92f49ce6b4

Status: Downloaded newer image for cloudnativelab.com/base/openjdk8-alpine:CloudnativeLab

VM settings:

Max. Heap Size (Estimated): 121.81M

Ergonomics Machine Class: server

Using VM: OpenJDK 64-Bit Server VM

openjdk version "1.8.0_212"

OpenJDK Runtime Environment (IcedTea 3.12.0) (Alpine 8.212.04-r0)

OpenJDK 64-Bit Server VM (build 25.212-b04, mixed mode)

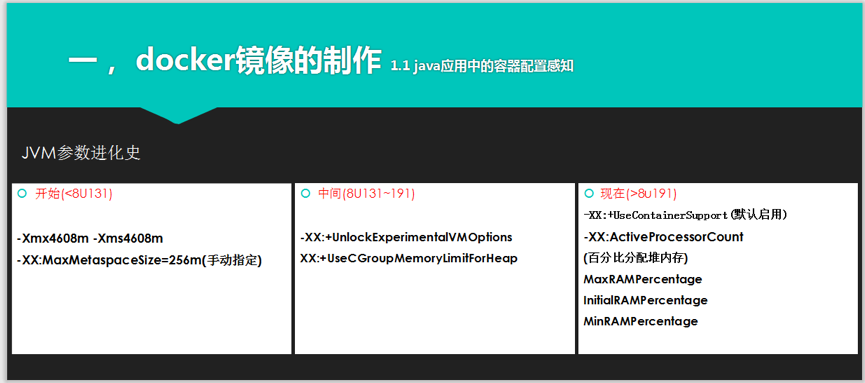

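3.2 Solutions for different JDK versions

On Java 10, turning on container support with -XX:+UseContainerSupport is all that is needed; the JVM process in the container then sees the imposed memory quota as its system memory: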

```

[root@infra03 ~]# docker run -m 400MB openjdk:10 java -XX:+UseContainerSupport -XX:InitialRAMPercentage=40.0 -XX:MaxRAMPercentage=90.0 -XX:MinRAMPercentage=50.0 -XshowSettings:vm -version

VM settings:

Max. Heap Size (Estimated): 348.00M

Using VM: OpenJDK 64-Bit Server VM

openjdk version "10.0.2" 2018-07-17

OpenJDK Runtime Environment (build 10.0.2+13-Debian-2)

OpenJDK 64-Bit Server VM (build 10.0.2+13-Debian-2, mixed mode)

```

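The share of memory the JVM uses can also be tuned with -XX:InitialRAMPercentage, -XX:MaxRAMPercentage and -XX:MinRAMPercentage. Note that -XX:MinRAMPercentage, despite its name, sets the maximum heap share on small-memory systems rather than a minimum heap size. Many Java programs call external processes or allocate native memory at runtime, so even inside a container some memory has to be left for everything outside the heap. For that reason -XX:MaxRAMPercentage should not be set too high.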
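To make the headroom argument concrete, here is a small sketch of the arithmetic behind -XX:MaxRAMPercentage for the 400MB container used above. It assumes Docker's `400MB` means 400 MiB and that the heap is simply limit × percentage; the function name is hypothetical. The JVM's own "Estimated" figure (348.00M in the output above) comes out somewhat lower than this raw number.

```python
from typing import Tuple

MIB = 1024 * 1024


def heap_budget(limit_bytes: int, max_ram_percentage: float) -> Tuple[int, int]:
    """Return (max_heap_bytes, headroom_bytes) for a container memory limit."""
    if not 0 < max_ram_percentage <= 100:
        raise ValueError("percentage must be in (0, 100]")
    heap = int(limit_bytes * max_ram_percentage / 100)
    return heap, limit_bytes - heap


heap, headroom = heap_budget(400 * MIB, 90.0)
print(heap // MIB, headroom // MIB)  # 360 40
```

With 90% only about 40 MiB remains for metaspace, thread stacks, native buffers and child processes, which is why a lower percentage is often the safer choice.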

Further reading shows that the -XX:+UseContainerSupport flag was backported to Java 8 in 8u191. So if the JDK in use is Java 8u191 or later, the JVM options above still work:

```

[root@infra02 ~]# docker run -m 400MB openjdk:8u191-alpine java -XX:+UseContainerSupport -XX:InitialRAMPercentage=40.0 -XX:MaxRAMPercentage=90.0 -XX:MinRAMPercentage=50.0 -XshowSettings:vm -version

VM settings:

Max. Heap Size (Estimated): 348.00M

Ergonomics Machine Class: server

Using VM: OpenJDK 64-Bit Server VM

openjdk version "1.8.0_191"

OpenJDK Runtime Environment (IcedTea 3.10.0) (Alpine 8.191.12-r0)

OpenJDK 64-Bit Server VM (build 25.191-b12, mixed mode)

```

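1. Use the flags described earlier: -XX:+UnlockExperimentalVMOptions -XX:+UseCGroupMemoryLimitForHeap -XX:MaxRAMFraction=2
2. Or use the backported -XX:+UseContainerSupport

The container runtime mounts the container's cgroup directories (quota and so on) into the container, so an entrypoint script can also read this information itself and pass sensible -Xms, -Xmx and similar options to the JVM.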
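A minimal sketch of such an entrypoint helper follows. The two file paths are the standard cgroup v2 and v1 memory-limit files; everything else (function names, the 0.5 heap ratio, which mirrors MaxRAMFraction=2, and the threshold used to detect "unlimited" on cgroup v1) is an assumption made for illustration, not something the original setup prescribes.

```python
from pathlib import Path
from typing import List, Optional

MIB = 1024 * 1024
# cgroup v1 reports a very large number when no limit is set (heuristic cutoff).
UNLIMITED_THRESHOLD = 1 << 60
# Standard memory-limit files: cgroup v2 first, then cgroup v1.
CGROUP_LIMIT_FILES = (
    "/sys/fs/cgroup/memory.max",
    "/sys/fs/cgroup/memory/memory.limit_in_bytes",
)


def parse_limit(text: str) -> Optional[int]:
    """Parse the content of a limit file; 'max' (cgroup v2) means unlimited."""
    value = text.strip()
    if not value.isdigit():
        return None
    limit = int(value)
    return limit if limit < UNLIMITED_THRESHOLD else None


def read_container_limit() -> Optional[int]:
    """Return the container memory limit in bytes, or None if none is found."""
    for name in CGROUP_LIMIT_FILES:
        try:
            limit = parse_limit(Path(name).read_text())
        except OSError:
            continue  # file missing or unreadable: try the next layout
        if limit is not None:
            return limit
    return None


def heap_flags(limit_bytes: int, heap_ratio: float = 0.5) -> List[str]:
    """Build -Xms/-Xmx flags that give the heap a fixed share of the limit."""
    heap_mib = int(limit_bytes * heap_ratio) // MIB
    return [f"-Xms{heap_mib}m", f"-Xmx{heap_mib}m"]


if __name__ == "__main__":
    limit = read_container_limit()
    print(" ".join(heap_flags(limit)) if limit else "no memory limit detected")
```

An entrypoint could splice the printed flags into the `java` command line; for a 400 MiB limit and the assumed ratio this yields `-Xms200m -Xmx200m`.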

The advantage of this approach is that Linux tools such as top and free also report adjusted values.

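By default the JVM's Max Heap Size is 1/4 of system memory, which on a 128G node means a 32G maximum heap. But as soon as the application's total memory exceeds the 2G limit declared in Kubernetes, it trips the resource limit defined on the cluster and the pod is restarted automatically. What we observed, accordingly, was this application accumulating more and more restarts.

4. A non-intrusive approach to resource visibility

Inside a container, /proc/cpuinfo and /proc/meminfo report the physical machine's core count and memory size, even though the container is certainly subject to resource limits. This misleads processes running in the container and turns some intended optimizations into pessimizations, for example the default thread counts and GC settings.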
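The sketch below shows how a process typically learns the memory size and why it is misled: it parses `MemTotal` from /proc/meminfo-style text and compares it with the container limit. The sample text (a node of roughly 128G) and the 2 GiB limit are hypothetical values chosen for illustration, and the function names are not from any library.

```python
import re
from typing import Optional

GIB = 1024 ** 3


def mem_total_bytes(meminfo_text: str) -> Optional[int]:
    """Extract MemTotal (reported in kB) from /proc/meminfo-style text."""
    match = re.search(r"^MemTotal:\s+(\d+)\s+kB$", meminfo_text, re.MULTILINE)
    return int(match.group(1)) * 1024 if match else None


def sees_host_memory(meminfo_text: str, limit_bytes: int) -> bool:
    """True when the reported total exceeds the container's actual limit."""
    total = mem_total_bytes(meminfo_text)
    return total is not None and total > limit_bytes


# Hypothetical sample: what a container on a large node might see.
sample = "MemTotal:       131927892 kB\nMemFree:         8123456 kB\n"
print(sees_host_memory(sample, 2 * GIB))  # True
```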

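There are three possible solutions:

1. Pass the resource limits in manually as parameters when starting java/golang/node.
2. On Java 8u131+ and Java 9+, add -XX:+UnlockExperimentalVMOptions -XX:+UseCGroupMemoryLimitForHeap; on Java 8u191+ and Java 10+, UseContainerSupport is enabled by default and nothing needs to be done. Neither measure, however, corrects what a process sees when it reads /proc directly or runs commands such as top, free -m or uptime.
3. Rewrite the relevant kernel parameters (the values exposed under /proc), which works for any program.

The first two options are fairly intrusive, so we chose the third: rewrite the relevant kernel parameters using lxcfs, mounted through hostPath in the YAML.

Besides CPU and memory, the same applies to uptime, diskstats, swaps and similar information; once rewritten, top, free -m, uptime and other commands show correct values inside the container.

The CPU limit deserves attention: a container's CPU limit does not pin it to dedicated cores, it limits CPU time. For example, a 4-core physical machine can run 4 threads in parallel, whereas a container limited to 4 cores on a 32-core host can still run 32 threads in parallel, except that each core may only be used for 1/8 of its time slices.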
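The 1/8 figure can be checked with a few lines of arithmetic. The sketch assumes the limit is enforced as a CFS quota over the default 100 ms period and that the work spreads evenly across all host cores; both helper names are hypothetical.

```python
def cfs_quota_us(cpu_limit: float, period_us: int = 100_000) -> int:
    """CPU time (microseconds) the container may use per CFS period."""
    return int(cpu_limit * period_us)


def per_core_share(cpu_limit: float, host_cores: int) -> float:
    """Average fraction of each core usable when threads run on every core."""
    return min(1.0, cpu_limit / host_cores)


print(cfs_quota_us(4))        # 400000 us of CPU time per 100 ms period
print(per_core_share(4, 32))  # 0.125, i.e. 1/8 of each core
```

This is why sizing thread pools from the host's core count can backfire: 32 runnable threads share a budget worth 4 cores and get throttled once the quota for the period is used up.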

Deploying LXCFS as a DaemonSet, lxcfs-daemonset.yaml

https://github.com/denverdino/lxcfs-initializer.git

apiVersion: apps/v1beta2
kind: DaemonSet
metadata:
  name: lxcfs
  labels:
    app: lxcfs
spec:
  selector:
    matchLabels:
      app: lxcfs
  template:
    metadata:
      labels:
        app: lxcfs
    spec:
      hostPID: true
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
      containers:
      - name: lxcfs
        image: registry.cn-hangzhou.aliyuncs.com/denverdino/lxcfs:3.0.4
        imagePullPolicy: Always
        securityContext:
          privileged: true
        volumeMounts:
        - name: cgroup
          mountPath: /sys/fs/cgroup
        - name: lxcfs
          mountPath: /var/lib/lxcfs
          mountPropagation: Bidirectional
        - name: usr-local
          mountPath: /usr/local
      volumes:
      - name: cgroup
        hostPath:
          path: /sys/fs/cgroup
      - name: usr-local
        hostPath:
          path: /usr/local
      - name: lxcfs
        hostPath:
          path: /var/lib/lxcfs
          type: DirectoryOrCreate

Related configuration, lxcfs-config.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: lxcfs-initializer-default
  namespace: default
rules:
- apiGroups: ["*"]
  resources: ["pods"]
  verbs: ["initialize", "update", "patch", "watch", "list"]
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: lxcfs-initializer-service-account
  namespace: default
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: lxcfs-initializer-role-binding
subjects:
- kind: ServiceAccount
  name: lxcfs-initializer-service-account
  namespace: default
roleRef:
  kind: ClusterRole
  name: lxcfs-initializer-default
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: apps/v1
kind: Deployment
metadata:
  initializers:
    pending: []
  labels:
    app: lxcfs-initializer
  name: lxcfs-initializer
spec:
  replicas: 1
  selector:
    matchLabels:
      app: lxcfs-initializer
  template:
    metadata:
      labels:
        app: lxcfs-initializer
    spec:
      serviceAccountName: lxcfs-initializer-service-account
      containers:
      - name: lxcfs-initializer
        image: registry.cn-hangzhou.aliyuncs.com/denverdino/lxcfs-initializer:0.0.4
        imagePullPolicy: Always
        args:
        - "-annotation=initializer.kubernetes.io/lxcfs"
        - "-require-annotation=true"
---
apiVersion: admissionregistration.k8s.io/v1alpha1
kind: InitializerConfiguration
metadata:
  name: lxcfs.initializer
initializers:
- name: lxcfs.initializer.kubernetes.io
  rules:
  - apiGroups:
    - "*"
    apiVersions:
    - "*"
    resources:
    - pods

Notes:

1. Initializers must be enabled on the API server, as shown below

vi /app/kubernetes/cfg/kube-apiserver

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction,**Initializers** \

**--runtime-config=admissionregistration.k8s.io/v1alpha1** \

The admission-control flag has been renamed to enable-admission-plugins.

2. Tencent Cloud managed clusters already support this, but it has to be enabled manually

Verification

docker run --rm -it -m 200m \
  -v /var/lib/lxcfs/proc/cpuinfo:/proc/cpuinfo:rw \
  -v /var/lib/lxcfs/proc/diskstats:/proc/diskstats:rw \
  -v /var/lib/lxcfs/proc/meminfo:/proc/meminfo:rw \
  -v /var/lib/lxcfs/proc/stat:/proc/stat:rw \
  -v /var/lib/lxcfs/proc/swaps:/proc/swaps:rw \
  -v /var/lib/lxcfs/proc/uptime:/proc/uptime:rw \
  ubuntu bash

Add the following annotation to the pod template in the YAML: "initializer.kubernetes.io/lxcfs": "true"

web.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: web
  name: web
spec:
  replicas: 1
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      annotations:
        "initializer.kubernetes.io/lxcfs": "true"
      labels:
        app: web
    spec:
      containers:
      - name: web
        image: httpd:2.4.32
        imagePullPolicy: Always
        resources:
          requests:
            memory: "256Mi"
            cpu: "500m"
          limits:
            memory: "256Mi"
            cpu: "500m"

5. References

<https://medium.com/adorsys/jvm-memory-settings-in-a-container-environment-64b0840e1d9e>

<https://developers.redhat.com/blog/2017/04/04/openjdk-and-containers/>

Appendix

resources:
  requests:
    memory: "100Mi"
    cpu: "10m"
  limits:
    memory: "1024Mi"
    cpu: "500m"

[root@sit-node-001 developer]

bash-4.4

VM settings:

Max. Heap Size (Estimated): 247.50M

Ergonomics Machine Class: server

Using VM: OpenJDK 64-Bit Server VM

openjdk version "1.8.0_212"

OpenJDK Runtime Environment (IcedTea 3.12.0) (Alpine 8.212.04-r0)

OpenJDK 64-Bit Server VM (build 25.212-b04, mixed mode)

then change the

bash-4.4# java -XX:+UseContainerSupport -XX:InitialRAMPercentage=40.0 -XX:MaxRAMPercentage=90.0 -XX:MinRAMPercentage=50.0 -XshowSettings:vm -version

VM settings:

Max. Heap Size (Estimated): 891.31M

Ergonomics Machine Class: server

Using VM: OpenJDK 64-Bit Server VM

openjdk version "1.8.0_212"

OpenJDK Runtime Environment (IcedTea 3.12.0) (Alpine 8.212.04-r0)

OpenJDK 64-Bit Server VM (build 25.212-b04, mixed mode)

bash-4.4# java -XX:+UseContainerSupport -XX:MaxRAMPercentage=90.0 -XshowSettings:vm -version

VM settings:

Max. Heap Size (Estimated): 891.31M

Ergonomics Machine Class: server

Using VM: OpenJDK 64-Bit Server VM

openjdk version "1.8.0_212"

OpenJDK Runtime Environment (IcedTea 3.12.0) (Alpine 8.212.04-r0)

OpenJDK 64-Bit Server VM (build 25.212-b04, mixed mode)

This has to be set in the orchestration YAML file:

- name: ARGS

value: " -XX:+UseContainerSupport -XX:MaxRAMPercentage=90.0 -javaagent://jacocoagent.jar=includes=*,output=tcpserver,address=0.0.0.0,port=6300 -javaagent://pinpoint-agent/pinpoint-bootstrap-2.0.1.jar -Dpinpoint.agentId=adminapiexplorer -Dpinpoint.applicationName=adminapiexplorer -Dapp.id=admin-developer-apiexplorer -Dapollo.meta=http://apollo-config-svc:8060 -Denv=dev -Dapollo.bootstrap.namespaces=application,TEST1.apiexplorer-mybatis-db,swagger,TEST1.redis,idaas,TEST1.actuator"

Once limits are set, the corresponding -XX:+UseContainerSupport -XX:MaxRAMPercentage=90.0 must be added to the JVM startup options; otherwise the maximum JVM heap defaults to 1/4 of limits.memory.
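The two heap sizes seen in the appendix output follow from the 1024Mi limit. The sketch below parses a Kubernetes memory quantity (only plain bytes and the Ki/Mi/Gi suffixes, a deliberately small subset) and applies a percentage; the function names are hypothetical. The raw results, 256 MiB and about 921.6 MiB, sit slightly above the JVM's "Estimated" figures of 247.50M and 891.31M.

```python
_BINARY_SUFFIXES = {"Ki": 1024, "Mi": 1024 ** 2, "Gi": 1024 ** 3}


def parse_memory_quantity(quantity: str) -> int:
    """Parse a memory quantity such as '1024Mi' into bytes (subset only)."""
    for suffix, factor in _BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # no suffix: already in bytes


def max_heap_mib(limit: str, percentage: float) -> float:
    """Heap size in MiB for a given limits.memory and heap percentage."""
    return parse_memory_quantity(limit) * percentage / 100 / 1024 ** 2


print(f"{max_heap_mib('1024Mi', 25.0):.1f}")  # 256.0 (default 1/4)
print(f"{max_heap_mib('1024Mi', 90.0):.1f}")  # 921.6 (MaxRAMPercentage=90.0)
```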

resources:
  requests:
    memory: "100Mi"
    cpu: "10m"
  limits:
    memory: "1024Mi"
    cpu: "500m"

" -XX:+UseContainerSupport -XX:MaxRAMPercentage=90.0"
